Apple, in collaboration with Carnegie Mellon University, has developed a system called ARMOR, designed to help humanoid robots perceive their surroundings. ARMOR combines depth sensors with artificial intelligence to improve collision avoidance and navigation in complex environments.

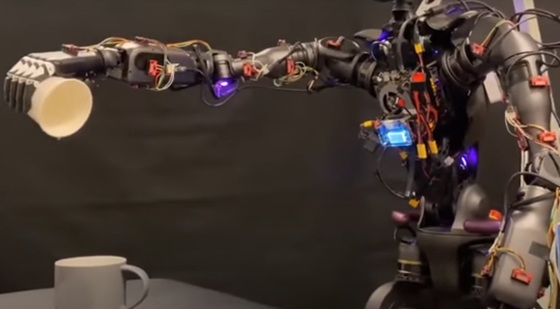

ARMOR uses Time-of-Flight (ToF) depth sensors distributed across the robot's arms. Because the sensors are mounted on the robot itself, they can detect nearby obstacles around the arms with few blind spots, giving the robot near-complete awareness of its immediate surroundings. Motion is generated by a transformer-based model, ARMOR-Policy, trained on human motion data, which lets the robot plan and adjust its movements dynamically.
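The idea of combining many small depth sensors into one picture of the robot's surroundings can be illustrated with a short Python sketch. This is not ARMOR's implementation. It assumes that each sensor reports a small square grid of per-zone ranges in metres over a square field of view (45° by default, an assumed value), and that each sensor's mounting pose on the arm is known. The names `zone_points`, `to_robot_frame`, `fuse`, and `nearest_obstacle` are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical sketch, not ARMOR's code: fuse arm-mounted multizone ToF readings
# into one point cloud in the robot's frame and query obstacle clearance.
Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
# One sensor: (grid of per-zone ranges, mounting rotation, mounting translation)
Sensor = Tuple[Sequence[Sequence[Optional[float]]], Mat3, Vec3]


def zone_points(ranges: Sequence[Sequence[Optional[float]]], fov_deg: float) -> List[Vec3]:
    """Turn an n x n grid of ranges (metres) into sensor-frame points, +z = boresight.

    Assumes each range is measured along the ray through its zone's centre and
    that the zones evenly divide a square field of view."""
    n = len(ranges)
    fov = math.radians(fov_deg)
    points: List[Vec3] = []
    for row in range(n):
        for col in range(n):
            r = ranges[row][col]
            if r is None or r <= 0:  # zone reported no valid return
                continue
            ax = ((col + 0.5) / n - 0.5) * fov  # horizontal angle of zone centre
            ay = ((row + 0.5) / n - 0.5) * fov  # vertical angle of zone centre
            dx, dy, dz = math.tan(ax), math.tan(ay), 1.0
            norm = math.sqrt(dx * dx + dy * dy + dz * dz)
            points.append((r * dx / norm, r * dy / norm, r * dz / norm))
    return points


def to_robot_frame(points: List[Vec3], rotation: Mat3, translation: Vec3) -> List[Vec3]:
    """Map sensor-frame points into the robot frame: p_robot = R * p_sensor + t."""
    (r0, r1, r2), (tx, ty, tz) = rotation, translation
    return [
        (r0[0] * x + r0[1] * y + r0[2] * z + tx,
         r1[0] * x + r1[1] * y + r1[2] * z + ty,
         r2[0] * x + r2[1] * y + r2[2] * z + tz)
        for x, y, z in points
    ]


def fuse(sensors: Sequence[Sensor], fov_deg: float = 45.0) -> List[Vec3]:
    """Merge readings from several arm-mounted sensors into one point cloud."""
    cloud: List[Vec3] = []
    for ranges, rotation, translation in sensors:
        cloud.extend(to_robot_frame(zone_points(ranges, fov_deg), rotation, translation))
    return cloud


def nearest_obstacle(cloud: List[Vec3], query: Vec3) -> float:
    """Distance from a query point (e.g. a point on an arm link) to the closest return."""
    return min((math.dist(p, query) for p in cloud), default=math.inf)


IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
# One hypothetical 4x4 sensor mounted 0.3 m along x, seeing a surface 0.8 m away in every zone.
grid = [[0.8] * 4 for _ in range(4)]
cloud = fuse([(grid, IDENTITY, (0.3, 0.0, 0.0))])
print(round(nearest_obstacle(cloud, (0.3, 0.0, 0.0)), 2))  # 0.8
```

Expressing every sensor's returns in one shared robot frame is what lets a downstream planner reason about obstacles around the whole arm at once. In ARMOR that downstream step is the learned transformer policy, which this sketch does not attempt to reproduce.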

In testing, ARMOR reduced collisions by 63.7%, markedly improving the robot's ability to move through crowded or cluttered spaces. ARMOR-Policy also cut computation time by a factor of 26 (about 26× faster) compared to conventional motion-planning methods, allowing faster decision-making.
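The two headline figures measure different things: a 63.7% reduction means about 36% of the baseline collisions remain, while a factor-of-26 speedup divides computation time by 26. The helper functions below are hypothetical, and the baseline values in the usage lines are illustrative numbers, not results from the ARMOR evaluation.

```python
def remaining_collisions(baseline: float, reduction_pct: float = 63.7) -> float:
    """Collisions left after a percentage reduction (illustrative helper)."""
    return baseline * (1 - reduction_pct / 100)


def reduced_time(baseline_ms: float, speedup: float = 26.0) -> float:
    """Computation time after an N-times speedup (illustrative helper)."""
    return baseline_ms / speedup


# Illustrative baselines only, not figures reported for ARMOR.
print(round(remaining_collisions(100), 1))  # 36.3
print(reduced_time(520.0))                  # 20.0
```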

This technology could improve the safety and efficiency of humanoid robots in applications ranging from industrial settings to interactions with the public. The project adds to Apple's work on autonomous systems and reflects its broader ambitions in robotics and AI.